At small scale, infrastructure is forgiving.

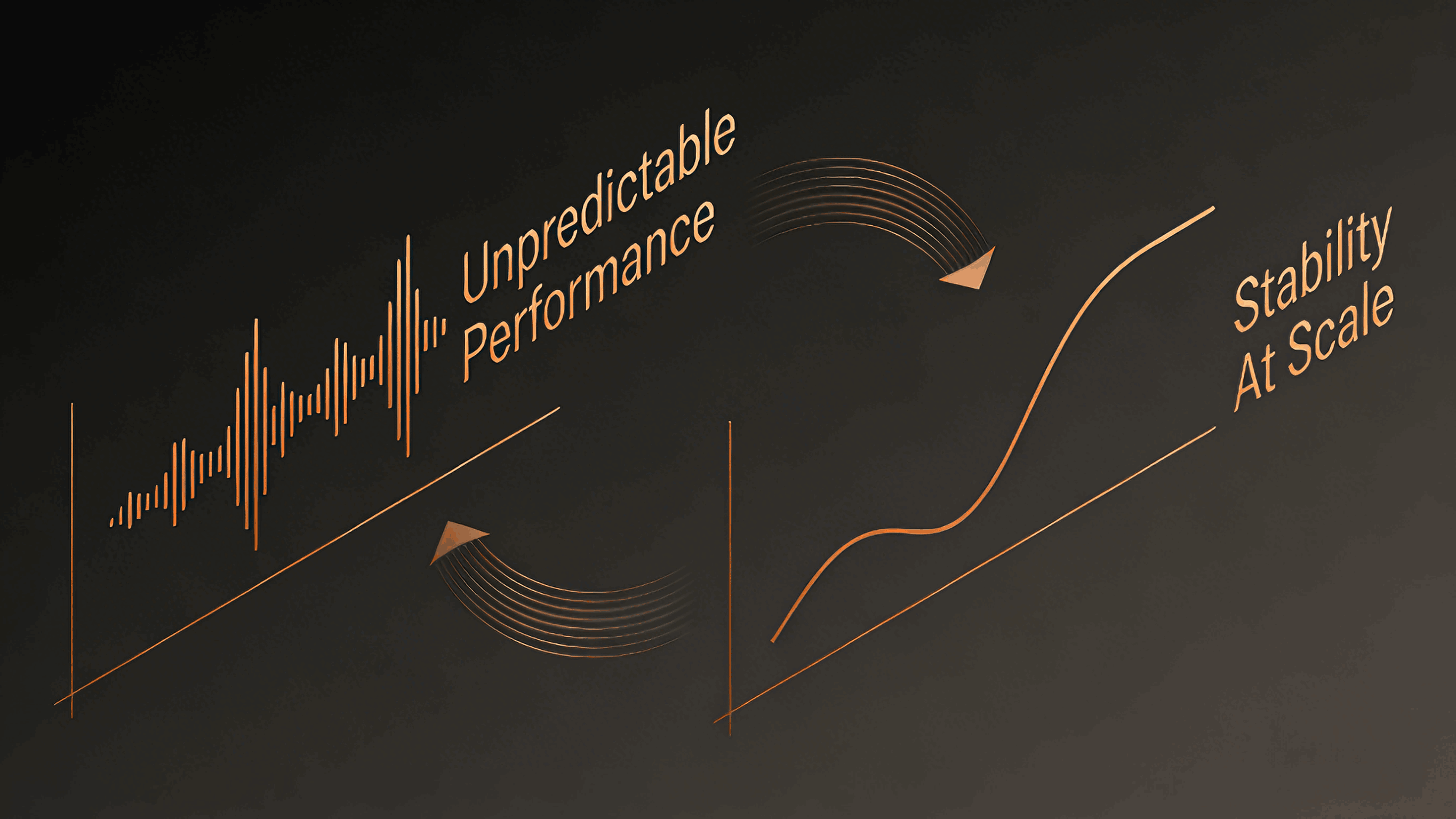

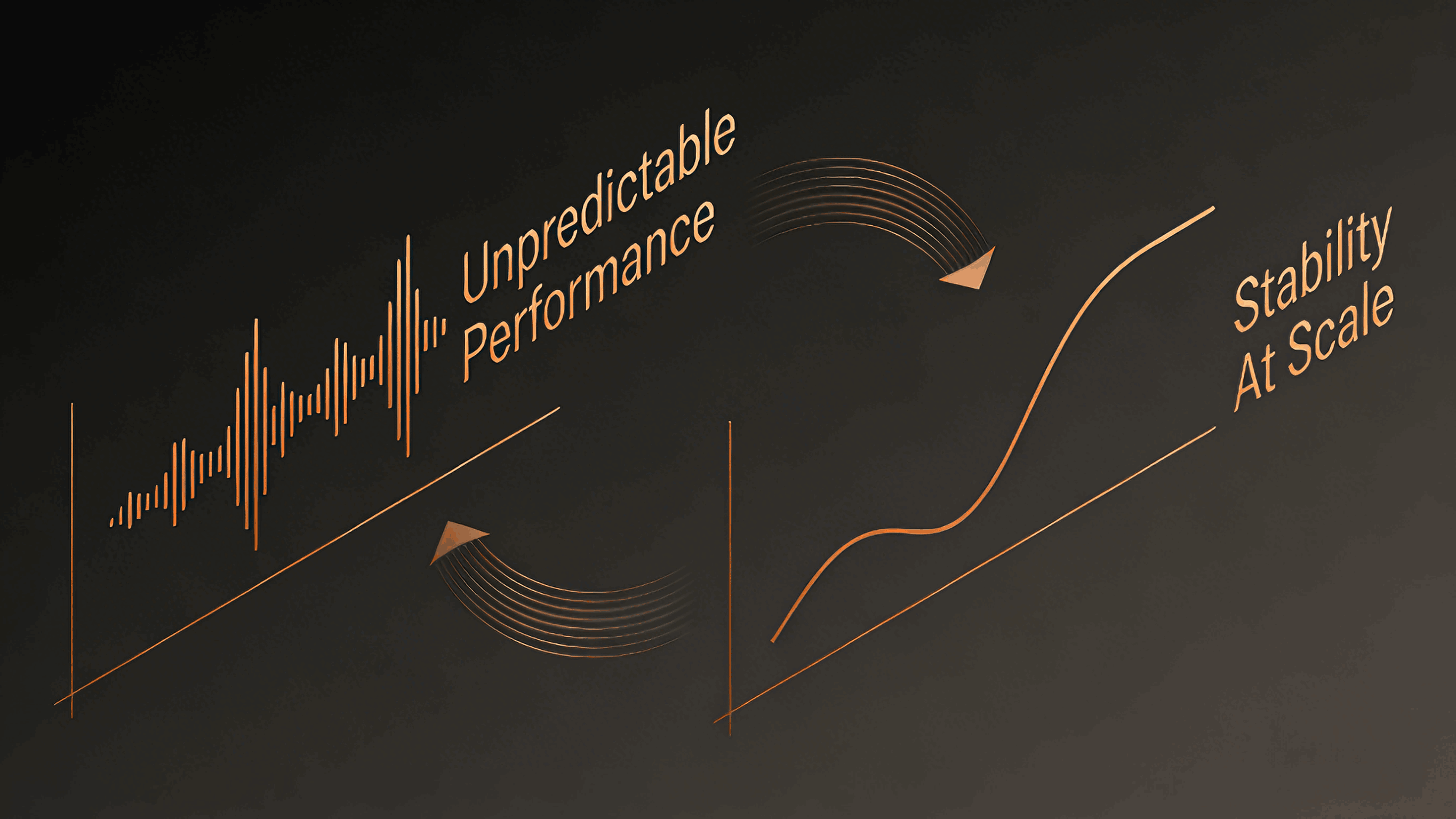

At data center scale, variance becomes the enemy.

Many teams experience a familiar pattern:

- A 10-server pilot runs flawlessly
- A 50-node expansion introduces minor quirks
- At 500 or 1,000 servers, “identical systems” begin behaving differently

What changed was not the architecture.

It was component variance amplified by scale.

Why Small Deployments Hide Big Problems

In a lab or pilot environment:

- Manual fixes are acceptable
- One-off BIOS tweaks go unnoticed
- Performance outliers are dismissed as noise

At 10 servers, variance is manageable.

At 1,000 servers, it becomes systemic.
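The jump from "manageable" to "systemic" can be sketched with basic probability. The snippet below assumes, purely for illustration, that each server independently has a 1% chance of carrying some undocumented difference. That figure and the function names are hypothetical, not measurements from any real fleet.

```python
def p_at_least_one(p_deviant: float, n_servers: int) -> float:
    """Probability that at least one of n independent servers deviates."""
    return 1.0 - (1.0 - p_deviant) ** n_servers


def expected_deviants(p_deviant: float, n_servers: int) -> float:
    """Expected number of deviating servers in a fleet of n."""
    return p_deviant * n_servers


# Assumed per-server deviation rate, chosen only for illustration.
P_DEVIANT = 0.01

for n in (10, 50, 500, 1000):
    print(
        f"{n:>5} servers: "
        f"P(at least one deviant) = {p_at_least_one(P_DEVIANT, n):.4f}, "
        f"expected deviants = {expected_deviants(P_DEVIANT, n):.1f}"
    )
```

Under that assumption, a 10-server pilot has a bit less than a 10% chance of containing even one odd node, while a 1,000-server fleet is almost certain to contain several.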

Every undocumented difference compounds.

Scale does not create problems — it exposes them.

The Myth of “Same SKU, Same System”

From a purchasing perspective, everything looks consistent.

From an operational perspective, reality diverges:

- Some nodes negotiate PCIe differently
- Some throttle earlier under sustained load
- Some exhibit intermittent I/O latency spikes
- Some fail only under specific traffic patterns

The root cause is rarely a single defective part.

It is uncontrolled component diversity inside a single SKU.
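One way to make that diversity visible is to count the distinct component combinations hiding behind each SKU. The following is a minimal sketch. The inventory records, field names, and version strings are all hypothetical, and a real fleet would pull them from its own inventory system.

```python
from collections import Counter

# Hypothetical inventory export: one record per node.
INVENTORY = [
    {"node": "n001", "sku": "SKU-A", "bios": "2.1", "nic_fw": "1.2", "ssd_model": "X100"},
    {"node": "n002", "sku": "SKU-A", "bios": "2.1", "nic_fw": "1.2", "ssd_model": "X100"},
    {"node": "n003", "sku": "SKU-A", "bios": "2.1", "nic_fw": "1.3", "ssd_model": "X100"},
    {"node": "n004", "sku": "SKU-A", "bios": "2.2", "nic_fw": "1.3", "ssd_model": "X100-B"},
    {"node": "n005", "sku": "SKU-B", "bios": "3.0", "nic_fw": "1.2", "ssd_model": "Y200"},
]

# Fields that together define a hardware "variant" (an assumption for this sketch).
FINGERPRINT_FIELDS = ("bios", "nic_fw", "ssd_model")


def fingerprint(record: dict) -> tuple:
    """Reduce a node record to the tuple of fields that define its variant."""
    return tuple(record[field] for field in FINGERPRINT_FIELDS)


def variants_per_sku(inventory: list) -> dict:
    """Map each SKU to a Counter of {variant fingerprint: node count}."""
    result = {}
    for record in inventory:
        result.setdefault(record["sku"], Counter())[fingerprint(record)] += 1
    return result


for sku, variants in variants_per_sku(INVENTORY).items():
    print(f"{sku}: {len(variants)} distinct variant(s)")
    for variant, count in variants.most_common():
        print(f"  {variant}: {count} node(s)")
```

Any SKU that reports more than one variant is a candidate for the behaviors listed above, even though every node in it was purchased as the same system.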

Where Component Variance Hits First

1. Performance Predictability

Schedulers assume uniform capacity.

Component variance breaks that assumption.

Result:
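One consequence can be sketched numerically. When work is split evenly across nodes that are assumed identical, the job finishes only when the slowest node finishes. The throughput figures below are invented for illustration, and `completion_time` is a hypothetical helper, not a model of any particular scheduler.

```python
def completion_time(total_work: float, throughputs: list) -> float:
    """Time for an evenly split job to finish.

    Every node receives the same share of work, so the job ends
    when the slowest node completes its share.
    """
    share = total_work / len(throughputs)
    return max(share / t for t in throughputs)


# Ten nodes with the same nominal throughput (work units per second).
uniform = [100.0] * 10
# Same fleet, but one node throttles to 80% under sustained load (assumed figure).
mixed = [100.0] * 9 + [80.0]

t_uniform = completion_time(10_000, uniform)
t_mixed = completion_time(10_000, mixed)

print(f"uniform fleet: {t_uniform:.1f} s")
print(f"one slow node: {t_mixed:.1f} s")
print(f"aggregate capacity lost: {1 - sum(mixed) / sum(uniform):.0%}")
print(f"completion time added:   {t_mixed / t_uniform - 1:.0%}")
```

In this toy case the fleet loses 2% of its aggregate capacity, yet the job takes 25% longer, because the scheduler's uniform-capacity assumption lets a single node set the pace.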

2. Stability and Reliability

Marginal differences remain invisible — until stress accumulates.

Result:

- Intermittent faults that resist reproduction
- Failures that appear “random”
- Escalation cycles without clear root cause

3. Validation and Deployment Velocity

Every untracked variant expands the test matrix.

Result:
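The growth is multiplicative: if every combination of components has to be validated, the number of configurations is the product of the variant counts per component. The component names and counts below are assumptions chosen for illustration.

```python
from math import prod


def matrix_size(variants_per_component: dict) -> int:
    """Number of distinct configurations if every combination must be tested."""
    return prod(variants_per_component.values())


# A locked baseline: exactly one approved option per component.
locked = {"bios": 1, "nic_fw": 1, "ssd_model": 1, "dimm_vendor": 1}
# The same platform after a few untracked substitutions (hypothetical counts).
drifted = {"bios": 3, "nic_fw": 2, "ssd_model": 3, "dimm_vendor": 2}

print("locked baseline:", matrix_size(locked), "configuration(s)")
print("after drift:    ", matrix_size(drifted), "configuration(s)")
```

A handful of individually minor substitutions turns one configuration into 36, and each of those either gets tested or ships unvalidated.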

4. Incident Response and RCA

Without component traceability, diagnosis turns speculative.

Result:
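With traceability in place, the first diagnostic question becomes mechanical: do incidents cluster on a particular variant? The sketch below assumes a hypothetical mapping from node to component variant and a hypothetical incident log. Both are stand-ins for whatever inventory and ticketing data a team actually has.

```python
from collections import defaultdict

# Hypothetical traceability data: which SSD firmware each node carries.
NODE_VARIANT = {
    "n001": "ssd-fw-1.0", "n002": "ssd-fw-1.0", "n003": "ssd-fw-1.0",
    "n004": "ssd-fw-1.0", "n005": "ssd-fw-1.0",
    "n006": "ssd-fw-1.1", "n007": "ssd-fw-1.1", "n008": "ssd-fw-1.1",
}

# Hypothetical incident log: node IDs that reported I/O latency spikes.
INCIDENT_NODES = ["n006", "n007", "n002", "n007"]


def failure_rate_by_variant(node_variant: dict, incident_nodes: list) -> dict:
    """Fraction of nodes per variant that appear at least once in the incident log."""
    totals = defaultdict(int)
    affected = defaultdict(int)
    for variant in node_variant.values():
        totals[variant] += 1
    for node in set(incident_nodes):  # count each node once
        affected[node_variant[node]] += 1
    return {variant: affected[variant] / totals[variant] for variant in totals}


rates = failure_rate_by_variant(NODE_VARIANT, INCIDENT_NODES)
for variant, rate in sorted(rates.items()):
    print(f"{variant}: {rate:.0%} of nodes affected")
```

A skewed rate like this does not prove causation, but it replaces speculation with a concrete hypothesis to test. Without the node-to-variant mapping, the same incidents would look evenly "random" across the fleet.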

Why Data Centers Demand Consistency, Not Flexibility

At scale, flexibility is often mistaken for robustness.

Data center operators prioritize:

- Fewer hardware permutations
- Strict configuration baselines
- Repeatable outcomes over optional features

They do so because every additional component variant multiplies operational risk.

Consistency is not a limitation — it is an optimization strategy.

The Architectural Shift: From Compatibility to Consistency

Modern infrastructure teams are moving away from asking:

“Is this component compatible?”

And toward:

“Is this component behaviorally identical at scale?”

That shift requires:

- Locked component versions
- Pre-validated configuration sets
- Explicit acceptance of limited variability
- Strong collaboration between architecture, sourcing, and manufacturing
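Locked versions are only useful if drift from them is detected. A minimal sketch of such a check follows, assuming a baseline expressed as a simple field-to-version mapping. The field names, version strings, and the `drift` helper are hypothetical.

```python
# Hypothetical locked baseline for one validated configuration set.
BASELINE = {"bios": "2.1", "nic_fw": "1.2", "ssd_model": "X100"}


def drift(baseline: dict, observed: dict) -> dict:
    """Return {field: (expected, actual)} for every field that differs.

    A field missing from the observed record is reported with actual=None.
    """
    return {
        field: (expected, observed.get(field))
        for field, expected in baseline.items()
        if observed.get(field) != expected
    }


# Observed records as they might come from an inventory scan (illustrative).
node_ok = {"bios": "2.1", "nic_fw": "1.2", "ssd_model": "X100"}
node_substituted = {"bios": "2.1", "nic_fw": "1.2", "ssd_model": "X100-B"}

for name, record in (("node_ok", node_ok), ("node_substituted", node_substituted)):
    differences = drift(BASELINE, record)
    status = "matches baseline" if not differences else f"DRIFT {differences}"
    print(f"{name}: {status}")
```

Run at receiving, at provisioning, and after every maintenance action, a check of this kind turns a "spec-equivalent" substitution into an explicit decision instead of a silent one.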

Lessons Learned from Scaling Failures

Teams that scale successfully share common practices:

- They define hardware baselines as architectural artifacts
- They treat component variance as a design constraint
- They validate systems as ensembles, not parts
- They resist silent substitutions, even when specs match

These teams debug less — and scale faster.

Final Thought

Scaling from 10 to 1,000 servers is not a linear process.

It is a transition from tolerance to discipline.

When component variance is left unmanaged, system consistency collapses under scale.

When it is controlled, scale becomes predictable.

In data centers, consistency is the true performance multiplier.