The Hidden Interaction Between Thermals, VRM Behavior, Turbo Modes, and High-Concurrency I/O

When a server throws critical errors only at night, most engineers assume coincidence.

In reality, these seemingly unpredictable failures often stem from thermal envelopes, VRM load behavior, I/O concurrency patterns, and CPU frequency scaling—interactions that only become visible under very specific operational windows.

At Shenzhen Angxun Technology Co., Ltd.—a 24-year ODM/OEM manufacturer focused on server motherboards, industrial motherboards, desktop boards, Mini PCs, and embedded platforms—our engineering team has investigated thousands of real-world field failures. The “night-only” failure is among the most common and misunderstood patterns.

This article breaks down the engineering logic behind these issues and provides a framework to diagnose and eliminate them.

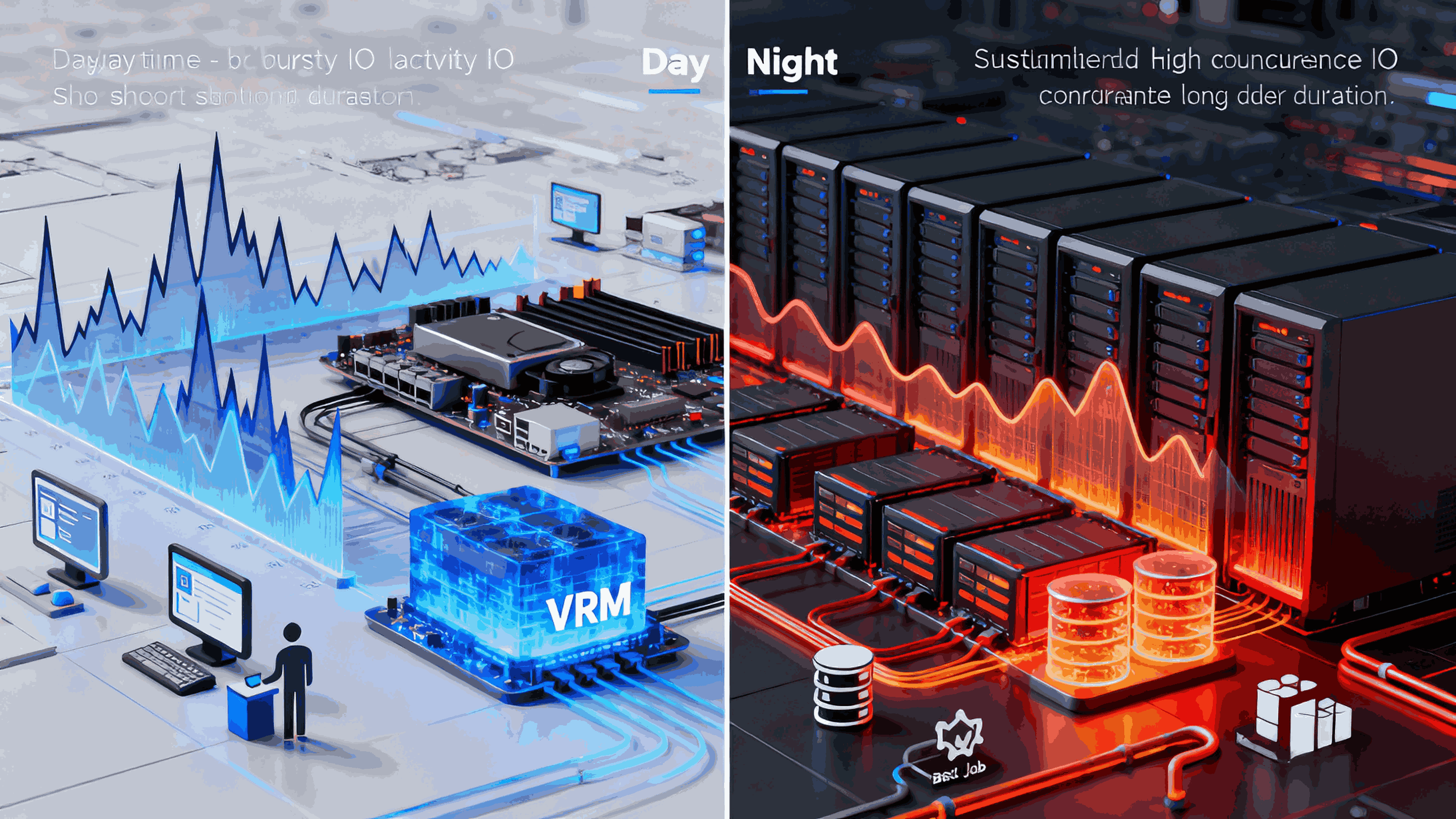

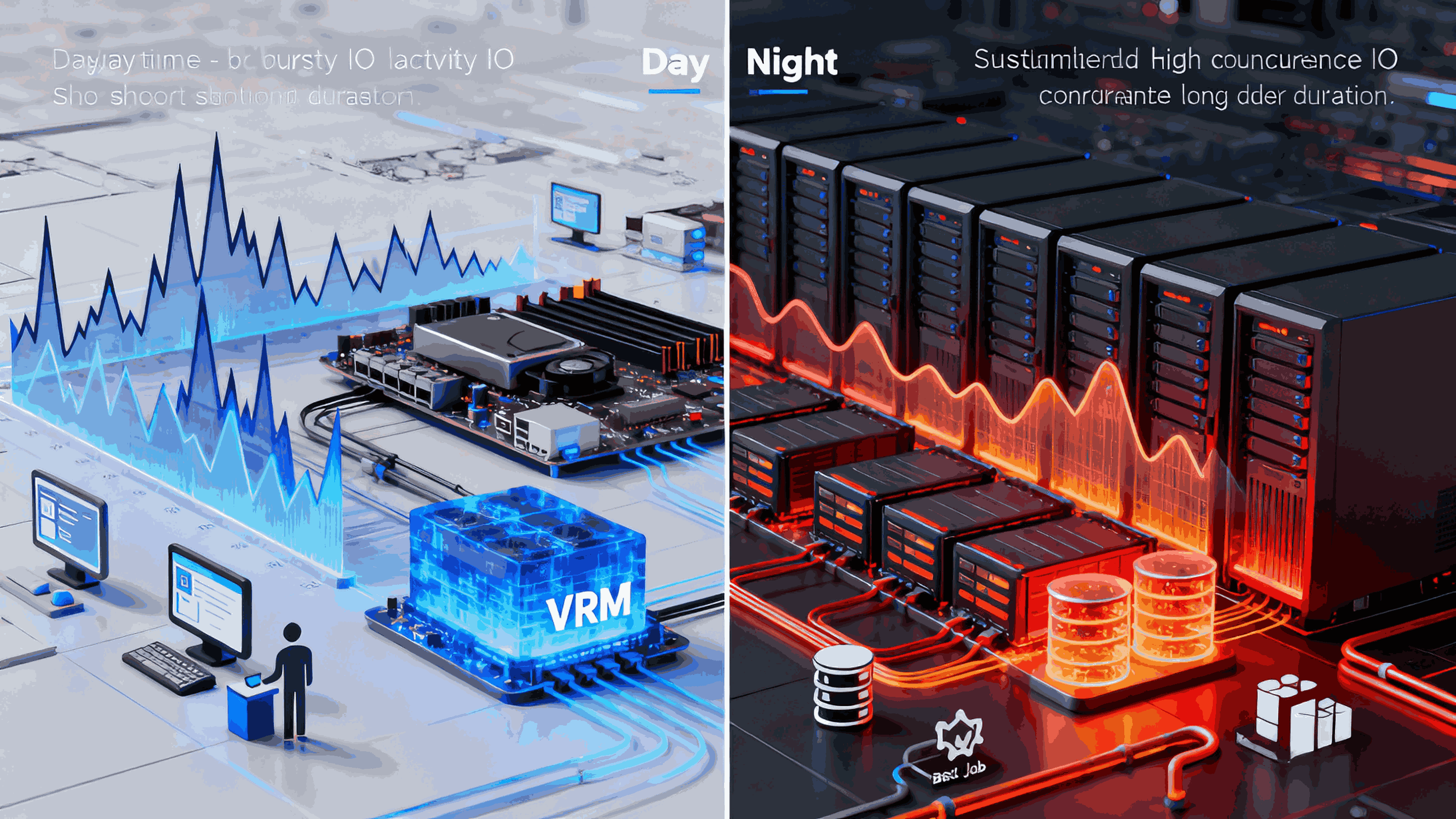

1. Why Night-Time Loads Trigger Failures

Most large-scale deployments run different workloads during daytime vs nighttime:

1.1. Batch Jobs, Backups & Reindexing

At night, clusters typically run batch jobs, backups, and reindexing tasks.

These generate sustained, high-concurrency storage and network I/O, unlike human-driven daytime workloads.

1.2. Sustained I/O → Sustained Heat

Daytime traffic spikes are bursty.

Night-time batch workloads are duration-heavy, pushing:

NVMe SSDs to peak write loads

NICs to long-duration Tx/Rx queues

CPUs to long turbo intervals

VRMs to maximum duty cycles

Many failures appear only when the entire system stays hot for hours, not seconds.
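The "hours, not seconds" point can be illustrated with a first-order (single RC) thermal model. In this model a component's temperature approaches its steady-state value exponentially, with time constant τ. The sketch below is only an illustration. The ambient temperature, temperature rise, and 20-minute time constant are hypothetical values chosen for the example, not measurements of any particular board. Real VRMs, SSDs, and heatsinks also behave as several coupled thermal stages rather than one.

```python
import math

def first_order_temp(t_s: float, t_ambient_c: float, delta_t_ss_c: float, tau_s: float) -> float:
    """Temperature of a component modeled as a single thermal RC stage.

    T(t) = T_ambient + dT_ss * (1 - exp(-t / tau))
    """
    return t_ambient_c + delta_t_ss_c * (1.0 - math.exp(-t_s / tau_s))

# Hypothetical values for illustration only (not measured on any real board):
AMBIENT_C = 30.0   # air temperature inside the chassis
RISE_C = 60.0      # steady-state temperature rise under full sustained load
TAU_S = 20 * 60    # assumed 20-minute thermal time constant

if __name__ == "__main__":
    # Compare a short daytime-style burst with long night-time load windows.
    for label, seconds in [("30 s burst", 30), ("5 min", 300), ("1 h", 3600), ("3 h", 3 * 3600)]:
        temp = first_order_temp(seconds, AMBIENT_C, RISE_C, TAU_S)
        print(f"{label:>10}: {temp:5.1f} C")
```

With these made-up numbers, a 30-second burst barely moves the temperature. After one hour, the component has reached about 95% of its steady-state rise. A short stress test therefore never sees the temperatures that a multi-hour batch window produces.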

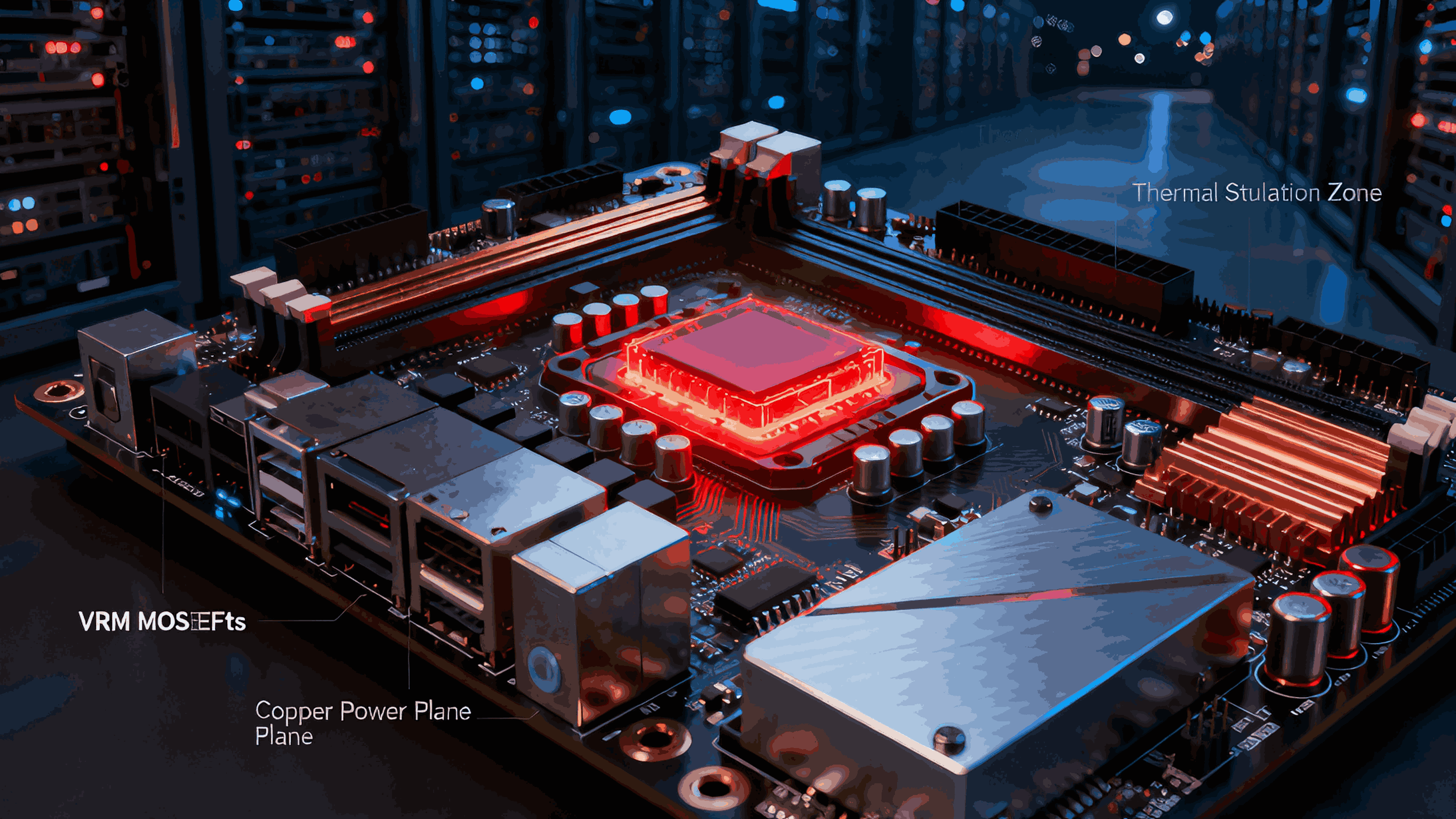

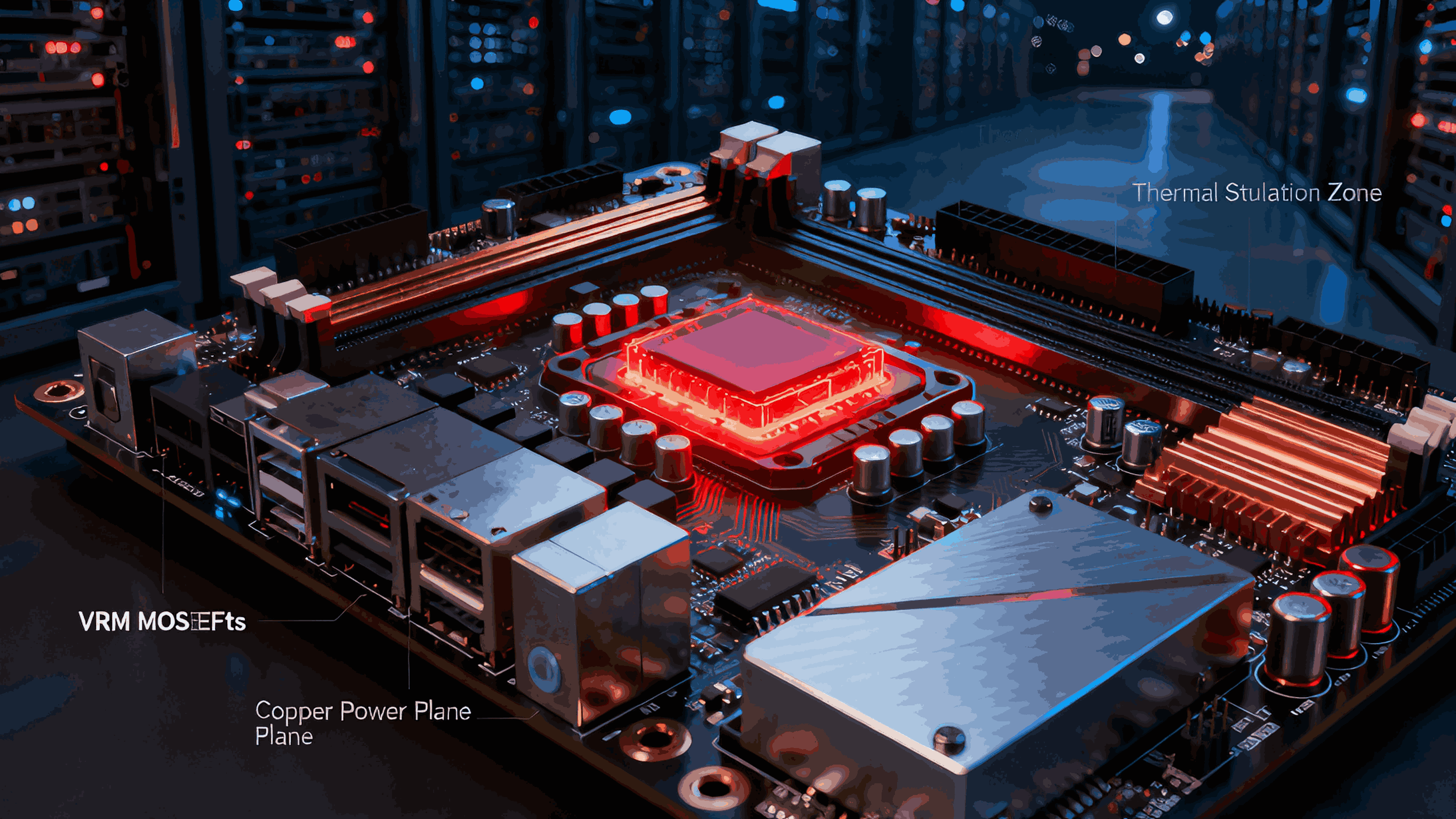

2. Thermal Saturation: The Silent Trigger of Night-Only Failures

Even a board with solid cooling may experience thermal saturation when the load stays high for hours.

2.1. VRM Thermal Saturation

VRM MOSFETs and chokes heat slowly.

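Under prolonged concurrency, they keep heating long after the load starts, until they reach thermal saturation at their highest operating temperature.

Once VRMs enter thermal saturation, the CPU or NIC they supply may see less stable supply voltage.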

Angxun advantage:

Our boards use independent CPU power stages, dual safety voltage devices, and zero-burn protection circuits to maintain voltage stability even under extended turbo workloads.

3. Turbo Behavior Causes Night-Time Instability

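Servers tend to hold higher turbo multipliers for longer at night because batch workloads keep the CPUs busy for hours rather than in short bursts.

This raises sustained power draw, which keeps the VRMs and power planes near their maximum load for the entire batch window.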

Turbo frequency + high I/O concurrency can expose marginal designs in:

PCB copper thickness

VRM cooling

Power plane distribution

SoC I/O bus timing
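Part of why turbo exposes these margins follows from the standard first-order approximation for CMOS dynamic power, P ≈ α·C·V²·f. Higher frequencies usually also require a higher core voltage, so power grows faster than frequency. The sketch below compares two operating points. The voltages and frequencies are hypothetical, not taken from any CPU datasheet. Leakage power, which also rises with temperature, is ignored.

```python
def relative_dynamic_power(v_base: float, f_base_ghz: float,
                           v_turbo: float, f_turbo_ghz: float) -> float:
    """Ratio of CMOS dynamic power (P ~ alpha * C * V^2 * f) at turbo vs. base.

    The activity factor alpha and switched capacitance C are assumed to be the
    same at both operating points, so they cancel out of the ratio.
    """
    return (v_turbo ** 2 * f_turbo_ghz) / (v_base ** 2 * f_base_ghz)

# Hypothetical operating points, for illustration only.
ratio = relative_dynamic_power(v_base=0.90, f_base_ghz=2.5, v_turbo=1.10, f_turbo_ghz=3.8)

if __name__ == "__main__":
    print(f"Turbo dynamic power is about {ratio:.2f}x base")
```

The VRM delivers this extra power at roughly the core voltage. As a result, the current it must supply rises accordingly, and so does the heat dissipated in its MOSFETs, its chokes, and the copper feeding the socket.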

Angxun advantage:

We employ thick copper PCB plating, high-grade all-solid capacitors, and aluminum thermal bases to support stable turbo performance.
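To see why copper thickness and plane layout matter, a rectangular copper region can be approximated by its sheet resistance (resistivity divided by thickness) times its length-to-width ratio. That gives an IR drop of V = I·R. The sketch below uses approximate textbook values for copper:

- resistivity of about 1.72e-8 Ω·m at 20 °C
- temperature coefficient of about 0.0039 per °C
- 1 oz/ft² foil about 35 µm thick

The 100 A current and the plane dimensions are hypothetical. Real power delivery also involves vias, current crowding, and multiple layers, which this model ignores.

```python
RHO_CU_20C = 1.72e-8   # ohm*m, approximate resistivity of copper at 20 C
ALPHA_CU = 0.00393     # 1/C, approximate temperature coefficient of copper resistivity
OZ_TO_M = 34.8e-6      # approximate thickness of 1 oz/ft^2 copper foil, in meters

def plane_ir_drop_mv(current_a: float, length_mm: float, width_mm: float,
                     copper_oz: float, temp_c: float = 20.0) -> float:
    """IR drop (mV) across a rectangular copper region carrying current along its length."""
    thickness_m = copper_oz * OZ_TO_M
    rho = RHO_CU_20C * (1.0 + ALPHA_CU * (temp_c - 20.0))  # resistivity at temp_c
    sheet_res = rho / thickness_m                          # ohms per square
    resistance = sheet_res * (length_mm / width_mm)        # squares: length/width (units cancel)
    return current_a * resistance * 1000.0                 # volts -> millivolts

if __name__ == "__main__":
    # Hypothetical example: 100 A flowing along a 20 mm x 40 mm region of a power plane.
    for oz in (1.0, 2.0):
        for temp in (20.0, 90.0):
            drop = plane_ir_drop_mv(100.0, 20.0, 40.0, copper_oz=oz, temp_c=temp)
            print(f"{oz:.0f} oz copper at {temp:.0f} C: {drop:.1f} mV")
```

In this simplified model, doubling the copper weight halves the drop. Heating the plane from 20 °C to 90 °C raises the drop by a little over a quarter. That is one reason a marginal power plane can hold up in a short test and then sag after hours of sustained load.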

4. Storage & PCIe Behavior Under Night-Time I/O Flooding

4.1. NVMe Thermal Throttling

Heavy sustained writes cause NAND and controllers to heat up until the drive throttles its own performance to stay within its temperature limits.
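On Linux, one way to watch for this during a long run is the kernel's hwmon interface. Kernels built with NVMe hwmon support register each drive as a hwmon device named `nvme`, and hwmon reports temperatures in `temp*_input` files in millidegrees Celsius. The sketch below assumes that layout under `/sys/class/hwmon`. `read_nvme_temps` is a hypothetical helper name. The drive's own throttling thresholds are drive-specific and are not read here.

```python
from pathlib import Path

def read_nvme_temps(hwmon_root="/sys/class/hwmon"):
    """Return {hwmon_dir: {sensor_label: temp_c}} for hwmon devices named 'nvme'."""
    results = {}
    for dev in sorted(Path(hwmon_root).glob("hwmon*")):
        name_file = dev / "name"
        if not name_file.is_file() or name_file.read_text().strip() != "nvme":
            continue  # not an NVMe sensor device
        sensors = {}
        for temp_input in sorted(dev.glob("temp*_input")):
            prefix = temp_input.name[: -len("_input")]  # e.g. "temp1"
            label_file = dev / f"{prefix}_label"
            label = label_file.read_text().strip() if label_file.is_file() else prefix
            try:
                millideg = int(temp_input.read_text().strip())
            except (OSError, ValueError):
                continue  # sensor unreadable right now; skip it
            sensors[label] = millideg / 1000.0  # millidegrees C -> degrees C
        results[dev.name] = sensors
    return results

if __name__ == "__main__":
    for dev, sensors in read_nvme_temps().items():
        for label, temp_c in sensors.items():
            print(f"{dev} {label}: {temp_c:.1f} C")
```

Logging these readings every minute alongside the night-time job schedule makes it easier to correlate errors with drive temperature. `nvme smart-log` from nvme-cli reports similar temperature data.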

4.2. PCIe Topology Stress

High concurrency stresses:

Switches

Retimers

Root complex allocation

IOMMU tables

Misaligned or congested topologies can become bottlenecks or failure points under this kind of sustained load.
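On Linux, a cheap first check for a misbehaving topology is whether any PCIe link has trained below its reported capability. The kernel exposes `current_link_speed`, `max_link_speed`, `current_link_width`, and `max_link_width` for PCIe devices under `/sys/bus/pci/devices`. The sketch below assumes that layout, and `find_degraded_links` is a hypothetical helper.

A reduced link is not always a fault. A device in a slower slot will legitimately run below its own maximum, and some devices lower their link speed when idle to save power. Results are therefore best compared while the system is under load and against the platform's expected topology.

```python
from pathlib import Path

def _read(path):
    """Return the stripped contents of a sysfs attribute, or None if it is missing."""
    try:
        return path.read_text().strip()
    except OSError:
        return None

def _speed_gts(text):
    """Parse '8.0 GT/s PCIe' (or the older '8 GT/s' form) into 8.0; None if unparseable."""
    try:
        return float(text.split()[0])
    except (AttributeError, IndexError, ValueError):
        return None

def _width(text):
    """Parse a link width such as '16'; None if missing or non-numeric."""
    try:
        return int(text)
    except (TypeError, ValueError):
        return None

def find_degraded_links(pci_root="/sys/bus/pci/devices"):
    """List devices whose current link speed or width is below the reported maximum."""
    degraded = []
    for dev in sorted(Path(pci_root).glob("*")):
        cur_s = _speed_gts(_read(dev / "current_link_speed"))
        max_s = _speed_gts(_read(dev / "max_link_speed"))
        cur_w = _width(_read(dev / "current_link_width"))
        max_w = _width(_read(dev / "max_link_width"))
        if None in (cur_s, max_s, cur_w, max_w):
            continue  # attributes absent or unparseable; cannot evaluate this device
        if cur_s < max_s or cur_w < max_w:
            degraded.append((dev.name, cur_s, max_s, cur_w, max_w))
    return degraded

if __name__ == "__main__":
    for name, cur_s, max_s, cur_w, max_w in find_degraded_links():
        print(f"{name}: {cur_s} GT/s x{cur_w} (max {max_s} GT/s x{max_w})")
```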

4.3. RAID Rebuilds

Night-time automatic scrubs or rebuilds add their own sustained disk I/O and heat on top of the scheduled batch workload.

5. Why Daytime Testing Never Catches These Issues

Because daytime testing is:

Short-duration

User-driven

Load-variable

Thermally intermittent

Night-time workloads are:

Long-duration

Constant

High concurrency

High thermal load

High VRM duty cycle

Engineers often test:

“Does the system crash under stress?”

They rarely test:

“Does the system crash after 3 hours of sustained, full-path I/O?”
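Answering that second question means a soak test must first reach thermal steady state and then keep running. One simple way to decide when steady state has been reached is to compare the average temperature of the most recent window of samples with the window before it. In the sketch below, the 10-sample window and the 1 °C tolerance are arbitrary assumptions. `simulated_temps` is a hypothetical stand-in that generates once-per-minute readings from a first-order thermal model (20-minute time constant) purely for demonstration. In a real run, the samples would come from the board's VRM, CPU, NIC, and SSD sensors.

```python
import math
from statistics import mean

def has_plateaued(temps_c, window, max_delta_c=1.0):
    """True if the mean of the last `window` samples is within `max_delta_c`
    of the mean of the `window` samples before it."""
    if len(temps_c) < 2 * window:
        return False  # not enough history to compare two full windows
    recent = mean(temps_c[-window:])
    previous = mean(temps_c[-2 * window:-window])
    return abs(recent - previous) <= max_delta_c

def simulated_temps(minutes, ambient_c=30.0, rise_c=60.0, tau_min=20.0):
    """Hypothetical once-per-minute readings from a first-order thermal model."""
    return [ambient_c + rise_c * (1.0 - math.exp(-m / tau_min)) for m in range(1, minutes + 1)]

if __name__ == "__main__":
    for minutes in (30, 180):
        print(minutes, "min plateaued:", has_plateaued(simulated_temps(minutes), window=10))
```

With this simulated data, the check reports no plateau after 30 minutes and a plateau after 3 hours. Only the hours of load that follow the plateau reproduce the night-time condition described above.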
